INDUSTRY

New organization to tackle AI issues

Fake images and generative AI: The US AI Safety Institute Consortium (AISIC) brings together more than 200 companies, including Adobe, Apple, Microsoft, OpenAI, Nvidia, and Meta, to discuss how AI is developed.


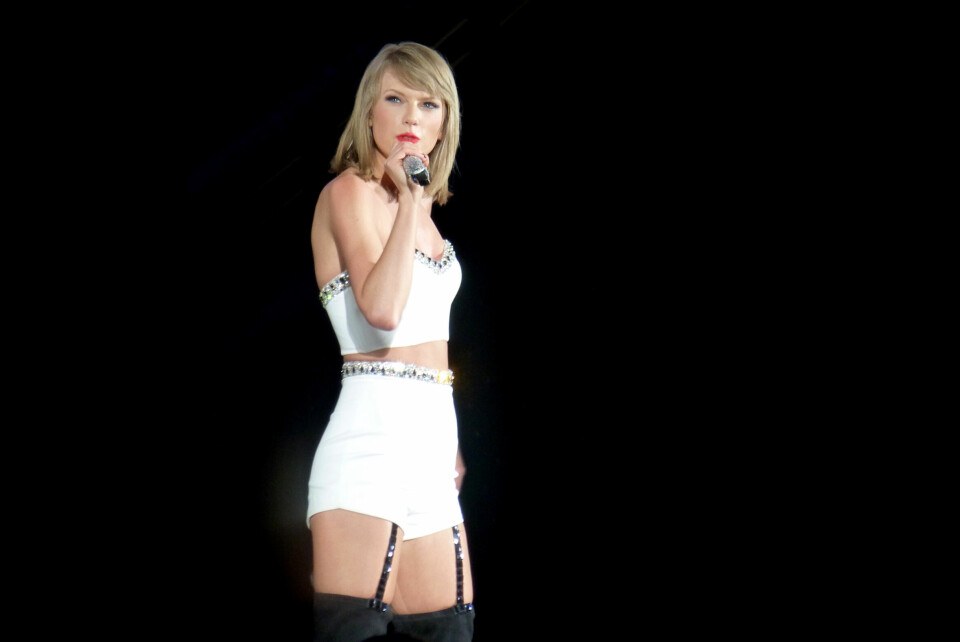

The exponential development of AI gives rise to a number of problems, which Kamera & Bild has reported on consistently. Images and videos can now be generated from a few sentences of text, and they can be misused.

Now more than 200 organizations and companies working with AI, including Adobe, Apple, Microsoft, OpenAI, Nvidia, and Meta, have joined a new body, the US AI Safety Institute Consortium (AISIC), to address the problems AI raises.

The participating companies will develop guidelines and examine different aspects of AI development: how AI can be misused, how it can be used safely, and how material such as images and videos created by generative AI can be properly labeled. Google's new generative AI engine "Lumiere", which can create lifelike video from text, is cited as an example of the next level of development.

AISIC will operate under the National Institute of Standards and Technology (NIST).

